OpenAI facts for kids

Former headquarters at the Pioneer Building in San Francisco

| Industry | Information technology |
|---|---|
| Founded | December 10, 2015 |
| Headquarters | San Francisco, California, U.S. |
| Number of employees | c. 770 (November 20, 2023) |

OpenAI is an American artificial intelligence (AI) research organization consisting of the non-profit OpenAI, Inc., registered in Delaware, and its for-profit subsidiary OpenAI Global, LLC. OpenAI researches artificial intelligence with the declared intention of developing "safe and beneficial" artificial general intelligence, which it defines as "highly autonomous systems that outperform humans at most economically valuable work". OpenAI has also developed several large language models, such as the GPT-3.5 and GPT-4 models behind the chatbot ChatGPT, as well as advanced image generation models like DALL-E 3, and in the past it published open-source models.

The organization was founded in December 2015 by Ilya Sutskever, Greg Brockman, Trevor Blackwell, Vicki Cheung, Andrej Karpathy, Durk Kingma, Jessica Livingston, John Schulman, Pamela Vagata, and Wojciech Zaremba, with Sam Altman and Elon Musk serving as the initial board members. Microsoft provided OpenAI Global, LLC with a $1 billion investment in 2019 and a $10 billion investment in 2023.

On November 17, 2023, the board removed Altman as CEO, while Brockman was removed as chairman and then resigned as president. Four days later, both returned after negotiations with the board, and most of the board members resigned.

Participants

Key employees:

- CEO and co-founder: Sam Altman, former president of the startup accelerator Y Combinator

- President and co-founder: Greg Brockman, former CTO and third employee of Stripe

- Chief Scientist and co-founder: Ilya Sutskever, formerly a machine learning expert at Google

- Chief Technology Officer: Mira Murati, previously at Leap Motion and Tesla, Inc.

- Chief Operating Officer: Brad Lightcap, previously at Y Combinator and JPMorgan Chase

Board of the OpenAI nonprofit:

- Bret Taylor (chairman)

- Lawrence Summers

- Adam D'Angelo

Individual investors:

- Reid Hoffman, LinkedIn co-founder

- Peter Thiel, PayPal co-founder

- Jessica Livingston, a founding partner of Y Combinator

Corporate investors:

- Microsoft

- Khosla Ventures

- Infosys

Motives

Some scientists, such as Stephen Hawking and Stuart Russell, have articulated concerns that if advanced AI someday gains the ability to re-design itself at an ever-increasing rate, an unstoppable "intelligence explosion" could lead to human extinction. Co-founder Musk characterizes AI as humanity's "biggest existential threat".

Musk and Altman have stated that they are partly motivated by concerns about AI safety and the existential risk from artificial general intelligence. OpenAI states that "it's hard to fathom how much human-level AI could benefit society," and that it is equally difficult to comprehend "how much it could damage society if built or used incorrectly". OpenAI also argues that safety research cannot safely be postponed, noting that "because of AI's surprising history, it's hard to predict when human-level AI might come within reach." OpenAI states that AI "should be an extension of individual human wills and, in the spirit of liberty, as broadly and evenly distributed as possible." Co-chair Sam Altman expects the decades-long project to surpass human intelligence.

Vishal Sikka, the former CEO of Infosys, stated that an "openness" where the endeavor would "produce results generally in the greater interest of humanity" was a fundamental requirement for his support, and that OpenAI "aligns very nicely with our long-held values" and their "endeavor to do purposeful work". Cade Metz of Wired suggests that corporations such as Amazon may be motivated by a desire to use open-source software and data to level the playing field against corporations such as Google and Facebook, which own enormous supplies of proprietary data. Altman states that Y Combinator companies will share their data with OpenAI.

Strategy

Musk posed the question: "What is the best thing we can do to ensure the future is good? We could sit on the sidelines or we can encourage regulatory oversight, or we could participate with the right structure with people who care deeply about developing AI in a way that is safe and is beneficial to humanity." Musk acknowledged that "there is always some risk that in actually trying to advance (friendly) AI we may create the thing we are concerned about"; nonetheless, he argued, the best defense is "to empower as many people as possible to have AI. If everyone has AI powers, then there's not any one person or a small set of individuals who can have AI superpower."

Musk and Altman's counter-intuitive strategy of trying to reduce the risk that AI will cause overall harm, by giving AI to everyone, is controversial among those who are concerned with existential risk from artificial intelligence. Philosopher Nick Bostrom is skeptical of Musk's approach: "If you have a button that could do bad things to the world, you don't want to give it to everyone." During a 2016 conversation about technological singularity, Altman said "we don't plan to release all of our source code" and mentioned a plan to "allow wide swaths of the world to elect representatives to a new governance board". Greg Brockman stated "Our goal right now... is to do the best thing there is to do. It's a little vague."

Conversely, OpenAI's initial decision to withhold GPT-2 due to a wish to "err on the side of caution" in the presence of potential misuse has been criticized by advocates of openness. Delip Rao, an expert in text generation, stated "I don't think [OpenAI] spent enough time proving [GPT-2] was actually dangerous." Other critics argued that open publication is necessary to replicate the research and to be able to come up with countermeasures.

More recently, in 2022, OpenAI published its approach to the alignment problem. They expect that aligning AGI to human values is likely harder than aligning current AI systems: "Unaligned AGI could pose substantial risks to humanity and solving the AGI alignment problem could be so difficult that it will require all of humanity to work together". They explore how to better use human feedback to train AI systems. They also consider using AI to incrementally automate alignment research.

OpenAI claims that it has developed a way to use GPT-4, its flagship generative AI model, for content moderation, lightening the burden on human teams.

Products and applications

As of 2021, OpenAI's research focused on reinforcement learning (RL). OpenAI is viewed as an important competitor to DeepMind.

Gym

Announced in 2016, Gym aims to provide an easily implemented general-intelligence benchmark over a wide variety of environments, akin to, but broader than, the ImageNet Large Scale Visual Recognition Challenge used in supervised learning research. It aims to standardize how environments are defined in AI research publications, so that published research becomes more easily reproducible. The project claims to provide the user with a simple interface. As of June 2017, Gym could only be used with Python. As of September 2017, the Gym documentation site was not maintained, and active work focused instead on its GitHub page.
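To give a feel for the kind of "simple interface" Gym standardized, here is a minimal sketch of the loop an agent runs against an environment: reset it, then repeatedly pick an action and call `step`, which hands back an observation, a reward, and a "done" flag. This example does not import Gym. `GuessNumberEnv` and `run_episode` are hypothetical names invented for illustration, and the four-value `(observation, reward, done, info)` return mirrors early Gym releases (later versions changed these signatures).

```python
import random


class GuessNumberEnv:
    """Hypothetical toy environment in the style of Gym's reset/step loop.

    The agent must guess a hidden number from 0 to 9. Each step returns
    (observation, reward, done, info), like early Gym releases.
    """

    def __init__(self, seed=None):
        self.rng = random.Random(seed)
        self.target = 0
        self.steps = 0

    def reset(self):
        # Start a new episode with a fresh hidden number.
        self.target = self.rng.randint(0, 9)
        self.steps = 0
        return 0  # first observation: no hint yet

    def step(self, action):
        self.steps += 1
        if action == self.target:
            # Correct guess: reward 1.0 and the episode ends.
            return 0, 1.0, True, {"steps": self.steps}
        # Hint: +1 means the guess was too low, -1 means too high.
        hint = 1 if action < self.target else -1
        done = self.steps >= 10  # give up after 10 guesses
        return hint, 0.0, done, {"steps": self.steps}


def run_episode(env):
    """A simple agent that uses the hints to binary-search the answer."""
    low, high = 0, 9
    env.reset()
    total_reward, done, info = 0.0, False, {}
    while not done:
        guess = (low + high) // 2
        obs, reward, done, info = env.step(guess)
        total_reward += reward
        if obs == 1:
            low = guess + 1
        elif obs == -1:
            high = guess - 1
    return total_reward, info["steps"]
```

For example, `run_episode(GuessNumberEnv(seed=0))` returns a reward of 1.0 after at most four guesses. Because the agent only relies on `reset` and `step`, the same loop could be pointed at any other environment with that shape, which is the kind of reuse a shared interface is meant to make easy.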

RoboSumo

Released in 2017, RoboSumo is a virtual world where humanoid metalearning robot agents initially lack knowledge of how to even walk, but are given the goals of learning to move and pushing the opposing agent out of the ring. Through this adversarial learning process, the agents learn how to adapt to changing conditions; when an agent is then removed from this virtual environment and placed in a new virtual environment with high winds, the agent braces to remain upright, suggesting it had learned how to balance in a generalized way. OpenAI's Igor Mordatch argues that competition between agents can create an intelligence "arms race" that can increase an agent's ability to function, even outside the context of the competition.

Video game bots and benchmarks

OpenAI Five

OpenAI Five is a team of five OpenAI-curated bots for the competitive five-on-five video game Dota 2 that learn to play against human players at a high skill level entirely through trial-and-error algorithms. Before the bots played as a team of five, the first public demonstration took place at The International 2017, the game's annual premier championship tournament, where Dendi, a professional Ukrainian player, lost to a bot in a live one-on-one matchup. After the match, CTO Greg Brockman explained that the bot had learned by playing against itself for two weeks of real time, and that the learning software was a step toward creating software that can handle complex tasks like a surgeon. The system uses a form of reinforcement learning: the bots learn over time by playing against themselves hundreds of times a day for months, and are rewarded for their actions.

By June 2018, the bots had learned to play together as a full team of five, and they were able to defeat teams of amateur and semi-professional players. At The International 2018, OpenAI Five played in two exhibition matches against professional players, but lost both games. In April 2019, OpenAI Five defeated OG, the reigning world champions of the game at the time, 2:0 in a live exhibition match in San Francisco. The bots' final public appearance came later that month, when they played 42,729 games in a four-day open online competition, winning 99.4% of them.

OpenAI Five's play as a Dota 2 bot illustrates the challenges AI systems face in multiplayer online battle arena (MOBA) games, and shows how deep reinforcement learning (DRL) agents can achieve superhuman competence in Dota 2 matches.

Gym Retro

Released in 2018, Gym Retro is a platform for reinforcement learning (RL) research on video games. Gym Retro is used to research RL algorithms and study generalization. Prior RL research focused mainly on optimizing agents to solve single tasks, whereas Gym Retro makes it possible to study generalization between games with similar concepts but different appearances.

Debate Game

In 2018, OpenAI launched the Debate Game, which teaches machines to debate toy problems in front of a human judge. The purpose is to research whether such an approach may assist in auditing AI decisions and in developing explainable AI.

Dactyl

Developed in 2018, Dactyl uses machine learning to train a Shadow Hand, a human-like robot hand, to manipulate physical objects. It learns entirely in simulation using the same RL algorithms and training code as OpenAI Five. OpenAI tackled the object orientation problem by using domain randomization, a simulation approach which exposes the learner to a variety of experiences rather than trying to fit to reality. The set-up for Dactyl, aside from having motion tracking cameras, also has RGB cameras to allow the robot to manipulate an arbitrary object by seeing it. In 2018, OpenAI showed that the system was able to manipulate a cube and an octagonal prism.

In 2019, OpenAI demonstrated that Dactyl could solve a Rubik's Cube. The robot was able to solve the puzzle 60% of the time. Objects like the Rubik's Cube introduce complex physics that is harder to model. OpenAI did this by improving the robustness of Dactyl to perturbations by using Automatic Domain Randomization (ADR), a simulation approach of generating progressively more difficult environments. ADR differs from manual domain randomization by not needing a human to specify randomization ranges.
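The idea behind Automatic Domain Randomization can be illustrated with a small, simplified sketch. A single simulator parameter (here a hypothetical cube friction value) starts at a fixed value; its randomization range is widened automatically whenever the agent succeeds at the edge of the current range, and narrowed when it struggles. The class and function names, the thresholds (0.8 and 0.5), the step size, and the toy "skill" model that stands in for a real trained policy are all assumptions made for illustration, not OpenAI's implementation, which randomizes many parameters and trains a neural network in simulation.

```python
import random


class AdrParam:
    """A randomized simulator parameter whose range widens automatically.

    Hypothetical example: `nominal` could be a cube's friction coefficient.
    The range is nominal +/- level * step, so level 0 means no randomization.
    """

    def __init__(self, nominal, step):
        self.nominal = nominal
        self.step = step
        self.level = 0

    @property
    def bounds(self):
        half_width = self.level * self.step
        return self.nominal - half_width, self.nominal + half_width

    def sample(self, rng):
        # Draw one value for the next simulated episode.
        low, high = self.bounds
        return rng.uniform(low, high)

    def update(self, boundary_success, widen_at=0.8, shrink_at=0.5):
        # No human picks the final range: it grows while the agent copes
        # with the hardest (boundary) settings and shrinks when it does not.
        if boundary_success >= widen_at:
            self.level += 1
        elif boundary_success < shrink_at and self.level > 0:
            self.level -= 1


def toy_boundary_success(skill, param):
    # Hypothetical stand-in for testing a policy at the edge of the range:
    # a wider range means harder edge cases.
    difficulty = param.level * param.step
    return 1.0 if skill >= difficulty else 0.0


def train(rounds=50, seed=0):
    rng = random.Random(seed)
    friction = AdrParam(nominal=1.0, step=0.05)
    skill = 0.0
    for _ in range(rounds):
        friction.sample(rng)  # a real system would run a simulated episode here
        skill += 0.01  # pretend the policy improves a little each round
        friction.update(toy_boundary_success(skill, friction))
    return friction
```

Calling `train()` returns a parameter whose range has grown well beyond its starting point, because the toy agent kept coping with the edge cases. This is the sense in which the environments become "progressively more difficult" without a person choosing the ranges by hand.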

API

In June 2020, OpenAI announced a multi-purpose API which it said was "for accessing new AI models developed by OpenAI" to let developers call on it for "any English language AI task".

Generative models

The company has produced several generative models.

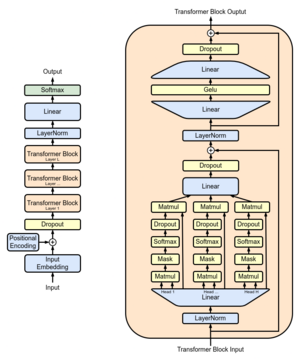

OpenAI's original GPT model ("GPT-1")

The original paper on generative pre-training of a transformer-based language model was written by Alec Radford and his colleagues, and published as a preprint on OpenAI's website on June 11, 2018. It showed how a generative model of language is able to acquire world knowledge and process long-range dependencies by pre-training on a diverse corpus with long stretches of contiguous text.

GPT-2

Generative Pre-trained Transformer 2 ("GPT-2") is an unsupervised transformer language model and the successor to OpenAI's original GPT model ("GPT-1"). GPT-2 was announced in February 2019, with only limited demonstrative versions initially released to the public. The full version of GPT-2 was not immediately released due to concern about potential misuse, including applications for writing fake news. Some experts expressed skepticism that GPT-2 posed a significant threat.

In response to GPT-2, the Allen Institute for Artificial Intelligence released a tool to detect "neural fake news". Other researchers, such as Jeremy Howard, warned of "the technology to totally fill Twitter, email, and the web up with reasonable-sounding, context-appropriate prose, which would drown out all other speech and be impossible to filter". In November 2019, OpenAI released the complete version of the GPT-2 language model. Several websites host interactive demonstrations of different instances of GPT-2 and other transformer models.

GPT-2's authors argue that unsupervised language models are general-purpose learners, as illustrated by GPT-2 achieving state-of-the-art accuracy and perplexity on 7 of 8 zero-shot tasks (i.e. the model was not further trained on any task-specific input-output examples).

The corpus it was trained on, called WebText, contains slightly over 8 million documents, totaling 40 gigabytes of text from URLs shared in Reddit submissions with at least 3 upvotes. GPT-2 avoids certain problems of word-level vocabularies by using byte pair encoding, which lets it represent any string of characters by encoding both individual characters and multiple-character tokens.
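Byte pair encoding builds its vocabulary by repeatedly merging the most frequent pair of adjacent symbols into a new, longer symbol. The sketch below learns merges over characters for readability (GPT-2 itself works on bytes and uses a far larger vocabulary); the function names and the tiny word list are invented for illustration.

```python
from collections import Counter


def _merge(symbols, pair):
    """Replace every adjacent occurrence of `pair` with one joined symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words, num_merges):
    """Learn up to `num_merges` merge rules from a list of words."""
    # Every word starts out as a sequence of single characters.
    vocab = Counter(tuple(w) for w in words)
    merges = []
    for _ in range(num_merges):
        # Count how often each adjacent pair of symbols appears.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)
        merges.append(best)
        vocab = Counter({_merge(s, best): f for s, f in vocab.items()})
    return merges


def encode(word, merges):
    """Split a word into tokens by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = _merge(symbols, pair)
    return list(symbols)
```

With `merges = learn_bpe(["hug", "hug", "hugs", "pug", "bun"], 2)`, the learned rules are `("u", "g")` followed by `("h", "ug")`, so `encode("hugs", merges)` gives `["hug", "s"]`. A word never seen in training, such as `"zzz"`, simply falls back to single characters (`["z", "z", "z"]`), which is why any string can still be represented.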

GPT-3

First described in May 2020, Generative Pre-trained Transformer 3 (GPT-3) is an unsupervised transformer language model and the successor to GPT-2. OpenAI stated that the full version of GPT-3 contains 175 billion parameters, two orders of magnitude larger than the 1.5 billion parameters in the full version of GPT-2 (although GPT-3 models with as few as 125 million parameters were also trained).
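The "two orders of magnitude" comparison can be checked from the two parameter counts: dividing one by the other gives a factor of roughly 117, and a factor of about 100 is two orders of magnitude.

```latex
\[
\frac{175 \times 10^{9}}{1.5 \times 10^{9}} \approx 117,
\qquad
\log_{10} 117 \approx 2.07
\]
```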

OpenAI stated that GPT-3 succeeds at certain "meta-learning" tasks. It can generalize the purpose of a single input-output pair. The GPT-3 release paper gives an example of translation and cross-linguistic transfer learning between English and Romanian, and between English and German.

GPT-3 dramatically improved benchmark results over GPT-2. OpenAI cautioned that such scaling up of language models could be approaching or encountering the fundamental capability limitations of predictive language models. Pre-training GPT-3 required several thousand petaflop/s-days of compute, compared to tens of petaflop/s-days for the full GPT-2 model. Like its predecessor, the GPT-3 trained model was not immediately released to the public on the grounds of possible abuse, although OpenAI planned to allow access through a paid cloud API after a two-month free private beta that began in June 2020.

On September 23, 2020, GPT-3 was licensed exclusively to Microsoft.

Codex

Announced in mid-2021, Codex is a descendant of GPT-3 that has additionally been trained on code from 54 million GitHub repositories, and is the AI powering the code autocompletion tool GitHub Copilot. In August 2021, an API was released in private beta. According to OpenAI, the model can create working code in over a dozen programming languages, most effectively in Python.

Several issues with glitches, design flaws, and security vulnerabilities have been brought up.

GitHub Copilot has been accused of emitting copyrighted code, with no author attribution or license.

OpenAI announced that it would discontinue support for the Codex API starting March 23, 2023.

Whisper

Released in 2022, Whisper is a general-purpose speech recognition model. It is trained on a large dataset of diverse audio and is also a multi-task model that can perform multilingual speech recognition as well as speech translation and language identification.

GPT-4

On March 14, 2023, OpenAI announced the release of Generative Pre-trained Transformer 4 (GPT-4), capable of accepting text or image inputs. OpenAI announced that the updated technology passed a simulated bar exam with a score around the top 10% of test takers; by contrast, the prior version, GPT-3.5, scored around the bottom 10%. GPT-4 can also read, analyze or generate up to 25,000 words of text, and write code in all major programming languages.

User interfaces

MuseNet and Jukebox (music)

Released in 2019, MuseNet is a deep neural net trained to predict subsequent musical notes in MIDI music files. It can generate songs with 10 instruments in 15 styles. According to The Verge, a song generated by MuseNet tends to start reasonably but then fall into chaos the longer it plays.

Released in 2020, Jukebox is an open-sourced algorithm to generate music with vocals. After training on 1.2 million samples, the system accepts a genre, artist, and a snippet of lyrics and outputs song samples. OpenAI stated the songs "show local musical coherence [and] follow traditional chord patterns" but acknowledged that the songs lack "familiar larger musical structures such as choruses that repeat" and that "there is a significant gap" between Jukebox and human-generated music. The Verge stated "It's technologically impressive, even if the results sound like mushy versions of songs that might feel familiar", while Business Insider stated "surprisingly, some of the resulting songs are catchy and sound legitimate".

Microscope

Released in 2020, Microscope is a collection of visualizations of every significant layer and neuron of eight neural network models which are often studied in interpretability. Microscope was created to make it easy to analyze the features that form inside these neural networks. The models included are AlexNet, VGG 19, different versions of Inception, and different versions of CLIP ResNet.

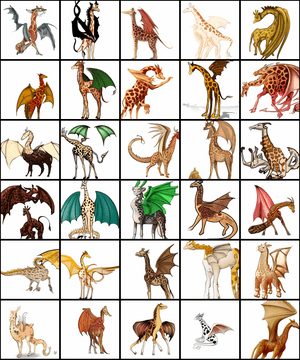

DALL-E and CLIP (images)

Revealed in 2021, DALL-E is a Transformer model that creates images from textual descriptions.

Also revealed in 2021, CLIP classifies images using textual descriptions. DALL-E uses a 12-billion-parameter version of GPT-3 to interpret natural language inputs (such as "a green leather purse shaped like a pentagon" or "an isometric view of a sad capybara") and generate corresponding images. It can create images of realistic objects ("a stained-glass window with an image of a blue strawberry") as well as objects that do not exist in reality ("a cube with the texture of a porcupine"). As of March 2021, no API or code was available.

DALL-E 2

In April 2022, OpenAI announced DALL-E 2, an updated version of the model with more realistic results. In December 2022, OpenAI published software on GitHub for Point-E, a new rudimentary system for converting a text description into a 3-dimensional model.

DALL-E 3

In September 2023, OpenAI announced DALL-E 3, a more powerful model better able to generate images from complex descriptions without manual prompt engineering and render complex details like hands and text. It was released to the public as a ChatGPT Plus feature in October.

ChatGPT

Launched in November 2022, ChatGPT is an artificial intelligence tool built on top of GPT-3.5 that provides a conversational interface that allows users to ask questions in natural language. The system then responds with an answer within seconds. ChatGPT reached 1 million users 5 days after its launch.

ChatGPT Plus is a $20/month subscription service that allows users to access ChatGPT during peak hours, provides faster response times, selection of either the GPT-3.5 or GPT-4 model, and gives users early access to new features.

In May 2023, OpenAI launched a ChatGPT app for Apple's App Store, followed in July 2023 by a version for the Google Play Store. The app supports chat history syncing and voice input (using Whisper, OpenAI's speech recognition model).

See also

In Spanish: OpenAI para niños

- Anthropic

- Center for AI Safety

- Future of Humanity Institute

- Future of Life Institute

- Google DeepMind

- Machine Intelligence Research Institute